AWK in brief

Introduction

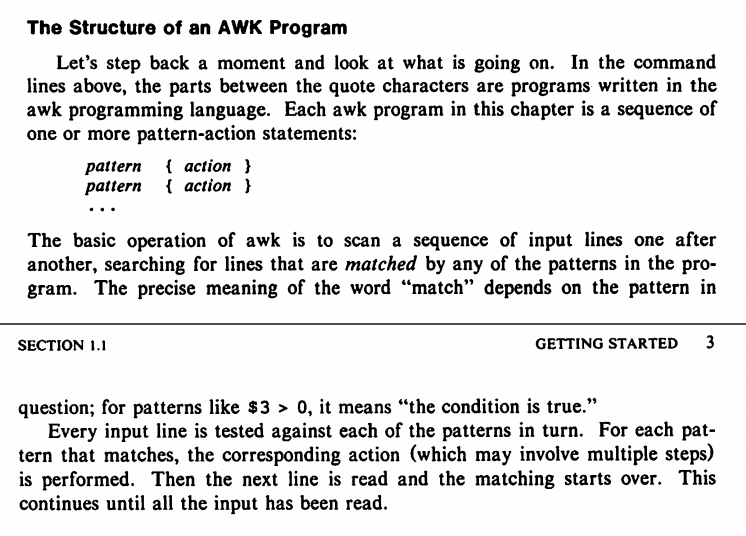

AWK is a multi purpose software tool mainly used as a filter, that is a program to read input and produce output. Input is readed in lines and every line is split into fields. After reading and splitting each line into fields, AWK can take some action e.g. print, count etc, according to patterns matched by the line readed. The following excerpt (pages 2-3) from the famous book "The AWK Programming Language" shows the elegance of AWK:

In other words every AWK program is a series of pattern/action items. AWK reads input

one line at a time, checks each line against the specified patterns and takes the correspondig actions

for the matching lines.

Default action is "print the line", while missing pattern "matches" every input line,

meaning that an action without a pattern will be taken for every single line of input.

On the other hand, a pattern without an action causes matching lines to be printed,

while non matching lines will be whipped out.

There are two extra "patterns", namely BEGIN and END,

for actions to be taken before any input has been read and after all input has been read.

That's all!

Here is a simple example taken by an interview of Brian Kernighan, one of the creators of AWK. We have volcano eruption data in a file. Each line consists of three columns separated by tab characters. First column is the volcano name, second column contains the date of the eruption, while third column is the magnitude of the eruption in a scale from 1 to 6.

Given that specific file of volcanic eruption data, we can do some interesting things with AWK just by using one-liner AWK programs. Let's say the data file is named vedata. The following will print eruptions of magnitude greater than 4:

awk '$3 > 4' vedata

In this case we used AWK as a filter on eruption magnitude. Our program consists of just one pattern, that is check for magnitude to be greater than 4. Action is missing, which means print (default action) the matching lines.

Now lets' print all eruptions of volcano "Krakatoa", filtering data by volcano name:

awk '/Krakatoa/' vedata

or more precisely:

awk '$1 == "Krakatoa"' vedata

Assuming that eruption dates are formated as YYYYMMDD, e.g. 18830827 is August 27 1883, we can print all eruptions of year 1996:

awk '($2 >= 19960000) && ($2 < 19970000)' vedata

or

awk '($2 > 19959999) && ($2 < 19970000)' vedata

We can produce some amazing results by writing just a few lines of code. Let's say we want to print total eruption counts by magnitude:

awk '{

count[$3]++

}

END {

for (i in count) {

print i, count[i]

}

}' vedata | sort -n

Now let's print eruption count by year of eruption:

awk '{

count[int($3 / 10000)]++

}

END {

for (i in count) {

print i, count[i]

}

}' vedata | sort -n

History

AWK is a program developed in Bell Labs of AT&T in the late 1970s as a general purpose tool to be included in the UNIX toolset. The name AWK is derived from the names of its creators: Al Aho, Peter Weinberger and Brian Kernighan.

From the first days after its birth, AWK has been prooved to be an invaluable tool

and has been used in more and more shell scripts and other UNIX tools.

After GNU project creation, AWK was significantly revised and expanded,

eventualy resulted in gawk, the GNU AWK implementation

written by Paul Rubin, Jay Fenlason and Richard Stallman.

GNU AWK is being maintained by Arnold Robbins since 1994.

Over the years, there have been implemented other versions of awk as well.

Brian Kernighan himself developed nawk, which has been dubbed the one-true-awk;

nawk has been used in many operating systems e.g.

FreeBSD, NetBSD, OpenBSD, macOS and illumos.

Another well known AWK implementation is mawk, written by Mike Brennan,

now maintained by Thomas Dickey.

awk is no more in use because of gawk's increased functionality.

Most of modern operating systems offer plain awk as another name (link) of gawk.

In my current Linux system there is a symbolic link of

/usr/bin/awk to /etc/alternatives/awk which in turn is linked to

/usr/bin/gawk.

However, every piece of software must be constantly evolving to reflect modern trends in

software or hardware development and AWK is no exception.

Over the years there were many aspects to be handled by awk/gawk

maintainers, e.g. internationalization, localization, wide characters, need for extensions,

C‑hooks, two-way communications etc.

Last improvements of gawk are about dynamic extensions,

but there are more improvements to be done in the future.

Basics

To use AWK effectively, one must know the core loop of AWK processing and some basic concepts affecting AWK's view of lines and columns. There are few handy command line options which may prove indispensable to know. Basic knowledge of regular expressions is also needed to use pattern matching the right way. It's also good to know how to redirect AWK's standard output to files or pipes; you can also redirect standard input or standard error. All of the above form a good background to start using AWK effectively, but you can also make beneficial use of AWK with much less, as long as you know what you are doing.

One thing that must be clear before using AWK is the execution road map

of every AWK program:

AWK executes some initialization code given in the BEGIN

section (optional).

Then, follows the so called core loop; AWK reads input one line

at a time and checks each line against given patterns (optional).

By the word line we usually mean one line of input, but you can change that

by setting RS variable to any single character or regular expression,

e.g. if RS is set to "\n@\n", then each line may contain

more than one lines of input, that means anything between lines consisting

just of a single "@" character is considered a record.

If a line (record) matches a pattern, then the corresponding action is taken.

A next command in a taken action causes all remaining pattern/action

entities to be skipped for the line at hand and the next input line is readed.

The same process is repeated until no more input lines remain.

After all input lines have been readed (and processed),

the END action is executed (if exists) and AWK exits.

Lines, Records and Fields

Actually, RS means record separator, so it's more

accurate to say that AWK reads input one record at a time,

than the usual line by line.

However, due to default behavior of AWK, the words record and

line are used interchangeably in the AWK's jargon.

Let's see the use of RS using input consisting of login names,

followed by email addresses and phone numbers.

Each user name ends with a single "@" character:

panos panos1962@gmail.com 0030-2310-999999 @ arnold arnold@yahoo.com 0045-6627-999999 @ mike mike@awk.info 0020-2661-999999 @ maria maria@baboo.com 0035-9932-999999

We want to construct a spreadsheet file of two columns, namely the login name

and the phone number. We can achieve this task with AWK, just by setting "@"

as record separator, tab character as output field separator and pipping the results

to ssconvert:

awk 'BEGIN {

RS = "\n@\n" # records separated by "@" lines

OFS = "\t" # set tab character as output field separator

}

{

print $1, $3 # print user name and phone number, tab separated

}' user | ssconvert fd://0 user.ods

That was less than 10 lines of AWK code, but the following one-liner would be suffice:

awk -v RS='\n@\n' -v OFS='\t' '{ print $1, $3 }' user | ssconvert fd://0 user.ods

Note the use of -v command line option in order to set the record

separator (RS) and the output field saparator

(OFS) in the command line.

We talked earlier about the RS variable, but what is the OFS?

We can print output using the print command in AWK.

To print many items, side by side, we can specify the items just after the

print command. In this case, the items are printed one after the other

without any kind of separation in between.

Using the comma separator between items to be printed, causes AWK to print the

items one after the other, although separated by spaces.

If we want the sparator to be something else, then we can do so by setting the

OFS variable to the separator wanted:

awk -v OFS='<<>>' '{ print $1, $NF }'

Assuming input consists of lines, were each line starts with the user's name, and

ends with the user's country, the above AWK one-liner will print the first and last

fields of every input line separated by "<<>>":

panos<<>>Greece maria<<>>India arnold<<>>Israel mike<<>>England ...

As you may have already guessed, NF variable is the columns' count

of the current input line (record).

Just after reading each input line, AWK counts the fields (coulumns) of the line

and set the NF accordingly.

We can then refer to each field as $1, $2, …$NF,

while fields are separated by AWK by the means of the FS

variable.

FS stands for field separator and by default is any number of

consequent space and tab cracters.

If input is a CSV file, then we must set FS to ",",

while if input fields are tab separated, then we must set FS

to "\t".

Regular Expressions

Regular Expressions (RE) are tightly coupled with AWK because AWK talks the language of REs out of the box, e.g. in the following AWK script the pattern sections are just RE matches:

BEGIN { ascetion = bsection = 0 }

/^[aA][0-9]+/ { asection++ }

/^[bB][0-9]+/ { bsection++ }

END { print asection, bsection }

The first pattern is a regular expression match as the two slashes (/) denote.

Actually the first pattern means: $0 matches ^[aA][0-9]+ regular expression.

But what exactly does this mean? In the RE dialect the ^ denotes the beginning

of the checked item (in this case the checked item is the whole line, so the ^

symbol denotes the beginning of the line), followed by the letters a and

A enclosed in brackets, which means one of the two letters a

or A, followed by a string of numerical digits (at least one).

This RE matches the following lines:

a1 This is a… A1.1 In this chapter… A29 All of the… a30B12 Any of these…

We've emphasized the matched part of the line.

The second pattern is similar, but the first letter must be b or

B for the line to be matched.

Now let's say that we want the above REs to be matched

by the second field instead of the whole line.

There comes ~, the so called match operator, to rescue:

BEGIN { ascetion = bsection = 0 }

$2 ~ /^[aA][0-9]+/ { asection++ }

$2 ~ /^[bB][0-9]+/ { bsection++ }

END { print asection, bsection }

The above script is readed as: Before reading any input, set asection

and bsection counters to zero.

For lines where the second field begins with a or A followed

by at least one numerical digit, increase asection by one.

For lines where the second field begins with b or B followed

by at least one numerical digit, increase bsection by one.

After processing all of the input lines, print asection and

bsection counters.

I/O redirection

It's very easy for AWK to redirect input and output.

Actually, redirecting I/O in AWK is very similar to shell I/O redirection:

AWK and shell use the same redirection operators, namely ">"

for output redirection, "<" for input redirection,

">> for appending data to files and

"|" for piping data to another running program.

Warning!

Because it's so easy for AWK to redirect output to files, you must know

what you are doing or else you are risking your files and data!

Let's say we want our program to filter input lines as follows: All lines with 3d column value less than a given number (min) to be collected to a file, lines with 3d column value greater than another given number (max) to be collected to another file, while all lines with 3d column value between min and max to be printed to standard output. Here is the AWK program to run this task:

$3 < min { print >less; next }

$3 > max { print >more; next }

{ print }

Assuming the data file is named data and the above program is stored to

filter.awk file, we can create files less10 and

more20 by running:

awk -v min=10 -v less="less10" -v max=20 -v more="more20" -f filter.awk data

Conclusion

Nowdays there exist plenty of amazing software languages, tools, frameworks, APIs etc for handling almost every need arising in our full computerised world, from simple calculations, to outer space communications, nanorobotics and machine learning. There is no single piece of software to meet every computer need out there, but AWK is a handy single program found in every computer system, standing there listening to your needs without asking for tons of supporting software, neither asking for super extra high payed experts on software engineering, nor for supercomputer sized machines in order to carry out from the simplest to the most complex tasks, just by following a few lines of code.